Everyone and their grandmother are talking about the impact of AI. That is understandable since the topic has been so hyped in the last few months.

AI – Why the hype?

It has become mandatory for any public company executive who does not want to see their stock plummet to announce that their organization is, if not transforming into an AI company, at least stuffing AI into everything they can think of, and it will be awesome!

Artificial Intelligence has immense potential to solve certain problems. However, we have yet to develop AGI, which can solve and create problems independently. For now, generative AI’s greatest realized potential is generating media hype.

AI challenges (even the name itself) the core of how we perceive ourselves and human intelligence. Its rapid advancement touches us on a fundamental emotional level. It is perhaps time to have a more grounded and realistic discussion about what AI progress means, if not for the whole of humanity, then at least in the sphere where I have spent my entire career so far – software engineering.

And no – ChatGPT did not write this blog post! Romantically enough, it was generated by the wetware of a traditional human neural network running on caffeine. If you are reading this past 2024, when we are promised that all content will be AI-generated – this is a luxurious hand-made piece of work – like a Rolls-Royce experience, if you will.

An emerging property, the Copernican principle of intelligence or why now

We have tried to create a working machine intelligence for as long as we have had machines. Only in recent decades, however, have we been able to produce machines significantly more complex than ever before. A recent Intel i7-12700K has over 9 billion transistors – the human brain, for comparison, runs on about 80 billion neurons. This will become important when we discuss large language models below.

We are still trying to figure out what it takes for what we consider intelligence to emerge. We have tried multiple ways to achieve it through machines: specialized programming language paradigms, logical paradigms, specialized hardware, etc., but to no significant effect.

One of the most publicized milestones in machine intelligence development was the victory of Deep Blue over Garry Kasparov in 1997. This, however, had nothing to do with intelligence (as we define it). Rather, it was a quick assessment of boards and navigating through a tree data structure with a lot of such boards. The moves taken by each player (and the board positions they led to) determined which board is the desired next move.
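To make the "tree of boards" idea concrete, here is a toy sketch of minimax search in Python. It is not Deep Blue's actual algorithm, which was far more elaborate. The game (players alternately take 1 to 3 stones, and whoever takes the last stone wins) and all function names are assumptions chosen purely for illustration.

```python
from functools import lru_cache

MOVES = (1, 2, 3)  # hypothetical toy game: take 1-3 stones per turn


@lru_cache(maxsize=None)
def minimax(stones: int, maximizing: bool) -> int:
    """Score a position: +1 if the maximizing player wins, -1 otherwise."""
    if stones == 0:
        # The player who just moved took the last stone and won.
        return -1 if maximizing else 1
    # Each legal move leads to a child position ("board") in the tree.
    scores = [
        minimax(stones - m, not maximizing)
        for m in MOVES
        if m <= stones
    ]
    return max(scores) if maximizing else min(scores)


def best_move(stones: int) -> int:
    """Pick the move that leads to the best-scoring child position."""
    legal = [m for m in MOVES if m <= stones]
    return max(legal, key=lambda m: minimax(stones - m, False))
```

Nothing here resembles understanding of the game: the program simply scores every reachable position and walks toward the best one, which is the point made above.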

Since 1958, we have attempted to replicate thinking using neural networks. The Perceptron was the first experiment of this kind.

It seems size does matter when it comes to neural networks. This is just another piece of evidence that makes our intelligence much less miraculous. It seems an inevitable side effect of growing neural networks in animal brains.

Computing has evolved. We have increased the number of neurons and parameters in networks. We have also enlarged the size of the data sets used in training. Both aspects, until recently, were quite limited.

With the widespread adoption of cloud computing, both limitations were rapidly overcome. Most large language models in 2023 have anywhere from 30 billion to a rumored 100 trillion parameters (ChatGPT 4).

We now have such an explosion in usable Machine Learning algorithms because we have access to larger resources and data. This enables us to train and execute vast neural networks, which in turn deliver more exciting results.

From the recent developments, I can conclude a few things:

- What we usually refer to as intelligence seems to be an emergent property of large neural networks that can change their internal connectivity (i.e. through training).

- There seem to be no significant hurdles to specific applications of intelligence (provided you do not expect expert-level results), as long as you have a big enough neural network and enough data to train it on a particular problem, e.g. generating text.

- The equivalent of Moore’s law in AI (model growth measured by the number of parameters) has run its course over the past 7 years. The latest models, say ChatGPT 4, are rumored to approach 100 trillion parameters, versus 175 billion for 3.5, and are becoming economically unfeasible to scale up further for mass usage.

- The sweet spot in terms of parameters for generative models seems to be somewhere between 100 and 170 billion parameters.

- Based on the previous two conclusions and a comparison of the performance of GPT 3.5 vs 4, I do not expect major advancements in the capabilities of generative models in the next year. Further advancement will come from training (which takes longer) rather than from simply adding parameters, which is faster to achieve but seems to have run out of steam in terms of what it delivers.

- Prompt engineering will not become a profession by itself. The current demand is driven by the accumulation of prompt libraries and experimentation, and it will not last in the mid-term future. Using prompts to prime AI tools or extract what we need from them will become a mainstream ability, much like googling, since it does not require much special knowledge and the models themselves have only a few custom parameters that need a technical background.

What will AI NOT change about software development – a realistic outlook

Let’s get the obvious question out of the way first. AI will not replace software engineers soon, but AI in software development will enhance some aspects of their work.

The main reason is that to prompt for code, you must have a degree of professional knowledge. Then, to integrate the code into the existing codebase, you must understand both programming and the codebase itself, a degree of understanding that is hard to achieve with current AI capabilities.

However, some aspects of the work will change significantly. In terms of quality and speed of the software development process – there have been many studies, some dating back to 1977 (Coding War Games). Surprisingly, technology, such as programming languages, and pay grade do not significantly impact the speed of coding. This holds true unless the programming language is Assembly or the experience level is under 6 months with the technology used.

Developers who aimed for zero-defect delivery did not deliver noticeably slower. More experienced personnel tend to have better soft skills and political astuteness. Their experience allows them to challenge decisions they know would be sub-optimal. And that is where their real value lies – not necessarily in the speed of code delivery.

The main differentiators in terms of speed of delivery come down to two things:

Work culture in the company or the team. Are the engineers you work with overlooking software quality? What kind of work ethic do they share, what is their outlook on deadlines, etc.?

The data shows that the best-performing developers cluster in some companies. The same is valid for the poor performers – there is a normal distribution here. If a team pushes for both speed and higher quality, so will most new members who join that team. So be careful who you team up with!

Work environment matters. Companies with quiet, less intrusive office environments tend to produce better results in speed and quality of software delivery.

Based on these, the best companies outperform the worst by 11.1 times in speed of delivery. Probably this is how the myth of the magical 10x software engineer was born.

There is another discussion on the existence of the 10x developer’s evil twin – the Net Negative Producing Programmer (aka NNPP): the one who creates a mess that takes more time to fix than the developer’s contribution to the team effort is worth. Bear in mind that the performance claim in the original research was made about companies, not individuals.

A system is only as strong as its weakest point, and the human brain is becoming the weakest point of the software development life cycle. We cannot improve the human brain exponentially, but we can take it out of the process. That is where the focus of improvement will be in the next decade. Hence the so far unfounded fear amongst some engineers.

AI will not replace developers any time soon because of several hurdles, not necessarily technical in nature. Someone must be able to explain results and bear responsibility for the actions taken by systems, including the legal consequences. Someone has to control the systems we did not create.

The development of programming languages in the second half of the 20th century is largely misunderstood. People believe it was about speeding up development, but this is incorrect. There is sufficient data to claim that a Cobol or a Fortran developer can solve a task in about the same time as a C#, Java, or C++ developer. The difference is that OOP languages give us paradigms for understanding bigger codebases by structuring the code and by reusing and associating ideas.

The same goes for microservices. Although the concept is starting to receive some bad press as of late, microservices undoubtedly help us have that comfy feeling of understanding the whole codebase we are working with (the ideal size of a microservice – the amount of code a brain can understand in detail at one time in one domain).

So, the development speed will improve, but not because code will be produced faster. It still needs a human brain to process and integrate it. Expect improvement but not a 10x one.

The speed of delivery increase is primarily the result of the quality of generated code for tedious purposes and the automation of repetitive tasks. For example, writing most of the unit tests – some will still need to be created by humans – to be discussed further in the post.

How AI will change software development and applications: improvements and risks based on our experience at Scalefocus

Currently, our company is measuring KPIs to establish the exact numbers with statistically relevant sample sizes. There is already emerging evidence of improvements or changes in development KPIs in some areas because of the implementation of AI-powered tools.

Please stay tuned for more posts when we have the exact numbers to share in a few months. At the moment, our experience is primarily with GitHub Copilot and CodeGPT.

Speed of delivery

As I already discussed, the increase in speed of delivery is not necessarily due to the reason most people will bring up – generating lines of code instead of writing them.

Developers still have to go through the code and validate it. For simple enough tasks, this takes time comparable to the effort of writing the code (P problem). The chance of typos and of bugs caused by small logical inconsistencies is greatly reduced, which in turn reduces the number of debugging and refactoring sessions. But it is not a time-saving measure, which I will explain further.

On that front, we are currently measuring delivery speed as the mean number of story points completed per sprint.

Tradeoffs – Caveat emptor

Although the speed of delivery increases, so do pull request sizes, since code is being generated more quickly and easily. You should keep track of your average PR size per git repo (excluding monorepos – there, you should track it individually per microservice or whatever logical or deployment units you are using).

Bigger PRs are harder to review, merge, etc., so the time spent reviewing each code chunk is decreased. We are monitoring PR sizes to deal precisely with this issue.
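As a rough illustration of this kind of tracking, the sketch below averages PR size per repo and, for monorepos, per unit. The `PullRequest` record, its fields and the sample data are hypothetical; in practice the numbers would come from your git hosting provider.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class PullRequest:
    repo: str
    lines_changed: int           # additions + deletions
    unit: Optional[str] = None   # microservice/deployment unit, for monorepos


def average_pr_size(prs):
    """Return the mean lines changed per (repo, unit) group."""
    groups = defaultdict(list)
    for pr in prs:
        groups[(pr.repo, pr.unit)].append(pr.lines_changed)
    return {key: mean(sizes) for key, sizes in groups.items()}


# Hypothetical sample data
prs = [
    PullRequest("billing-api", 120),
    PullRequest("billing-api", 480),
    PullRequest("platform-mono", 90, unit="auth"),
    PullRequest("platform-mono", 310, unit="search"),
]
sizes = average_pr_size(prs)
```

Comparing these averages before and after introducing code generation tools is one simple way to notice PR sizes creeping up.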

Quality

Using semi-ready code definitely helps raise the quality baseline of the codebase, both by reducing various defects and by providing at least an average solution in most cases in terms of performance, memory usage and code complexity. The first solution that comes to someone’s mind is not always even average, so we sometimes have to refactor it.

Tradeoffs

Even when the snippets themselves are correct, applying various generated snippets to your codebase makes subsequent debugging harder. They might have vastly different code styles and not follow the overall logic of dealing with similar problems elsewhere in the codebase. This unfamiliarity can lead to slower debugging or to wrong decisions while debugging generated code.

Software testing coverage

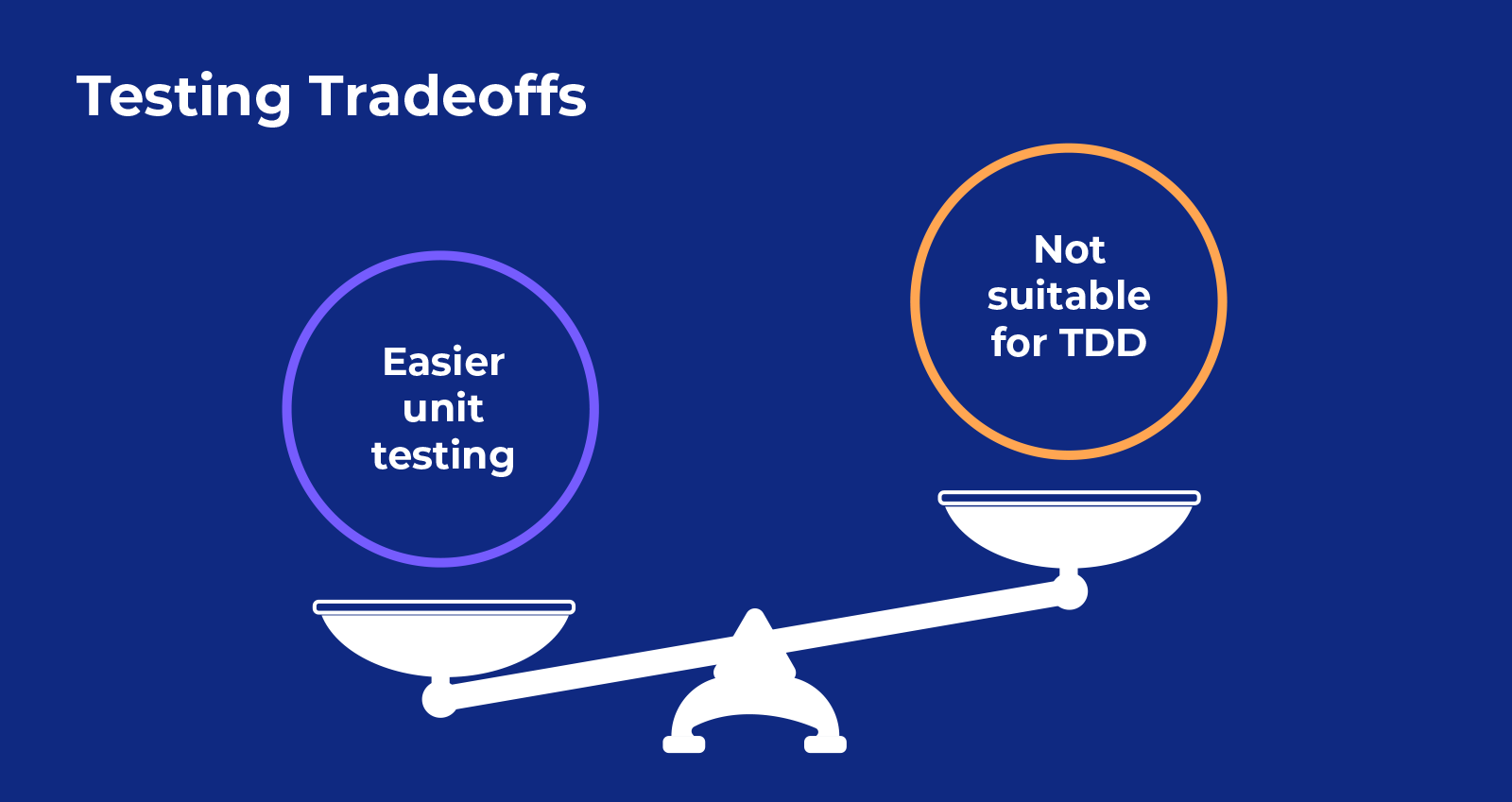

You will probably not be able to do proper TDD with the current AI code generation. AI generative models can be used to generate parts of unit tests.

This is an obvious application, regardless of the programming language used. Once you have code structures in place, the application of generative models is quite clear. Test coverage multiplies when you start using code generation, since writing unit tests becomes convenient.

Tradeoffs

Relying on AI-enabled models to generate test cases usually results in code-centric unit tests. Business-relevant test cases should be added. Further test cases should be created whenever a bug is found to ensure it does not re-emerge.

These practices tend to be forgotten once we rely more on generated test cases. We less often update test cases that we did not create. We are keeping track of the test coverage in two ways – through IDE statistics and SonarQube statistics.
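To illustrate the difference between the three kinds of test case, here is a minimal sketch. The `apply_discount` function, the business rules and the past bug are all hypothetical, invented only for this example.

```python
def apply_discount(total: float, percent: float) -> float:
    """Hypothetical function under test: returns the discounted order total."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(total * (1 - percent / 100), 2)


def test_code_centric():
    # Typical generated test: it mirrors the implementation's arithmetic.
    assert apply_discount(200.0, 10) == 180.0


def test_business_rule():
    # Business-relevant (assumed rules): a 100% promo makes the order free,
    # and a 0% promo never changes the price.
    assert apply_discount(59.99, 100) == 0.0
    assert apply_discount(59.99, 0) == 59.99


def test_regression_invalid_percent():
    # Regression test for a hypothetical past bug: discounts above 100%
    # once produced negative totals, so they must now be rejected.
    try:
        apply_discount(50.0, 150)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
```

A generator can usually produce the first test from the code alone. The second and third need knowledge of the business and of the defect history, which is why they still tend to be written by people.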

Maintainability

Here we have fewer opportunities and more risks that should be managed.

I mentioned the varied code styles you could get with extensive use of generative models creating snippets for your teams and the increase in PR sizes. Another important metric you should be aware of is defect resolution time. It might increase noticeably when developers have to deal with a patchy codebase.
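A minimal sketch of how defect resolution time could be computed is shown below. The timestamps are hypothetical, and the median is just one reasonable choice of summary, not necessarily the one your tracker reports.

```python
from datetime import datetime
from statistics import median


def resolution_hours(opened: str, resolved: str) -> float:
    """Hours elapsed between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(resolved) - datetime.fromisoformat(opened)
    return delta.total_seconds() / 3600


# Hypothetical defect records: (opened, resolved)
defects = [
    ("2023-05-02T09:00", "2023-05-02T15:00"),
    ("2023-05-03T10:00", "2023-05-04T10:00"),
    ("2023-05-08T08:30", "2023-05-08T20:30"),
]
median_hours = median(resolution_hours(o, r) for o, r in defects)
```

Tracking this value over time, per repo or per team, makes a slowdown caused by a patchy codebase visible early.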

Another risk is the tendency of developers to change a faulty piece of code with a generated one, instead of understanding the problem and fixing it. Again – easy code generation enables and even encourages such behavior. Depending on how coupled the codebase is, this practice may result in unforeseen side effects or bugs.

Security

Your code security will generally benefit from AI generative tools. They set a baseline at which generated code introduces fewer obvious security issues.

Tradeoffs

On the other hand, not having the context of your codebase when generating code can introduce security issues. This is due to the way the code interacts with your existing code. You should not throw away your security static code analysis and composition analysis systems. The generated code might sometimes introduce a dependency on a compromised library.

As an industry, we will see an increase in more diverse attacks on various levels of applications and infrastructure. The ability of generative models to find security issues in open-source software will definitely lower the bar for attackers.

Training of young engineers

Pairing less experienced engineers with a code generation tool is beneficial, especially in remote setups. It helps them learn quickly how to handle common software development issues. They will need to know how to describe the problem and solution for this to be effective. It ensures a baseline for their code output regarding performance, security, lack of code smells, etc.

Tradeoffs

Your engineers will learn to rely on generated code rather than understand the software’s business logic. This will decrease their ability to extend the functionalities and troubleshoot issues effectively.

AI tools cannot replace mentoring. Young engineers learn much more from their more experienced colleagues than just coding. They absorb the company’s culture, work ethic and discipline, problem-solving, ways of dealing with issues at work, communication and much more.

They become senior engineers by gaining experience and observing the actions of their experienced mentors. This involves not only learning the technologies used but copying their mentors’ behavior.

In short, you cannot get a senior developer by isolating an engineer and giving them access to AI tools.

Overall developer satisfaction

Any service created for people (most hyped AI services fall in that category for now) needs happy users to exist.

On the one hand, code generation is like an intern that does your menial tasks for you. On the other hand, it can take away the mental challenge of solving a problem. This challenge is often what gives developers such satisfaction. Initially, there tends to be a spike in satisfaction until the excitement of the new tool wears off.

Internally, we are monitoring the NPS of our developers who use AI tools for code generation. That helps us follow the trends in their satisfaction when they regularly utilize AI tools.
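For readers unfamiliar with the metric, the standard NPS calculation is the percentage of promoters (ratings of 9 or 10) minus the percentage of detractors (ratings of 0 to 6). The sketch below uses hypothetical survey answers and is not a description of our internal tooling.

```python
def net_promoter_score(ratings):
    """Standard NPS: % promoters (9-10) minus % detractors (0-6)."""
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical survey answers on the usual 0-10 scale
ratings = [10, 9, 8, 7, 6, 9, 3, 10]
nps = net_promoter_score(ratings)
```

Repeating the same survey at regular intervals shows whether the initial spike in satisfaction holds once the novelty wears off.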

Intellectual property

If you go back in time and give Shakespeare a copy of his sonnets and he likes them so much that he copies them – who would have written Shakespeare’s sonnets?

The lack of explainability on how an AI model works puts us in very much the same position. This is valid for code, text, images, video – really any content that AI conjures up.

An even bigger issue is how you can really be sure that your organization or customer’s code is not used for further training and offered externally to other organizations.

We ask our customers’ permission to use AI generative tools with their codebase. This is because there is no explicit legislation or regulation on the matter yet. With regard to code generation, I doubt there will be any soon, for various reasons that could be the basis for another blog post.

Conclusion

How we create software is changing, bringing more opportunities than risks. Still, we should be aware of the risks and manage them accordingly.

The future of software development belongs to the companies that adopt AI in their work (not just for code generation or code evaluation), giving them a sensible competitive edge over the ones that shun the tech. It is crucial to embrace the opportunities that come with AI development. To do this, we must adopt the culture and practices of using these models responsibly. Irresponsibly applying AI to everything is a recipe for disaster, but not using the tech’s strengths is an even bigger risk.

At Scalefocus, we combine AI with our proprietary reusable technology (Accelerator Apps). This helps us boost the productivity and quality of our engineering output, bringing it to new heights.